The field of artificial intelligence is currently dominated by Generative AI, and particularly by large language models (LLMs) such as GPT-4 and Google Bard. By demonstrating an impressive ability to generate human-like text, they have sent the world into a frenzy and are already proving useful in almost every field.

However, despite their undeniable success, LLMs are not without their problems and limitations. There is a growing realisation that LLMs might not improve significantly beyond their current state simply by growing larger. Recently, OpenAI’s CEO, Sam Altman, said that the era of ever-larger models is coming to an end. Of course, this does not mean that Generative AI and natural language processing (NLP) are not going to make any more progress. Rather, they will have to evolve by exploring new directions. But before we venture beyond large language models, let’s take a closer look at their current limits.

6 limitations of large language models

1. Lack of Common-Sense Understanding:

While LLMs excel at generating coherent and contextually relevant text, they lack fundamental common-sense understanding. These models are built on deep learning, so they rely primarily on statistical patterns and correlations present in the vast amount of data they are trained on. But a lot of the information and intricacies of everyday knowledge, which humans acquire intuitively, is not statistical in nature and therefore evades LLMs. This means that LLMs do not exhibit any true grasp of reality and have very limited abilities to reason or comprehend nuanced contexts. They are great at surface-level text generation, but they do not ‘understand’ what they are writing. This limitation hinders their ability to engage in deep conversations, understand sarcasm or irony, and apply real-world knowledge effectively.

2. Prone to Biases and Ethical Concerns:

Large language models, like all deep learning models, are prone to inheriting biases present in the training data. If the data reflects societal biases or prejudices, the model’s outputs will too, and may even amplify them. Addressing bias in LLMs is a complex challenge that requires careful curation of training data, as well as the development of robust methods to identify and mitigate bias during model training. The larger the training data becomes, the harder it is to rid it of bias.

3. Insufficient Contextual Understanding:

LLMs struggle with deep contextual understanding. They may generate responses that are contextually appropriate in a general sense but fail to capture the nuances and specificities of a given context. This limitation becomes apparent when LLMs are faced with ambiguous queries or complex reasoning tasks.

4. Lack of Explainability and Interpretability:

LLMs are neural networks with billions of parameters, which makes it challenging to interpret and explain their decisions. Their inner workings are ‘black boxes’ that provide very limited visibility into how and why specific answers are generated. The lack of explainability raises concerns, especially in sensitive applications such as legal, healthcare, and finance, where transparency and accountability are of critical importance.

5. High Computational Resources and Environmental Impact:

Training and deploying LLMs requires massive resources, both in computation, which means very powerful hardware, and in energy. Moreover, scaling up model size yields diminishing returns, and we are already approaching practical limits on the number of parameters, the amount of training data, and the computational power and energy that can be devoted to training them.

The environmental impact of these models cannot be overlooked, as the carbon footprint associated with their development and usage is very significant. Published estimates put the electricity consumed by a single training run of GPT-3 at roughly 1,300 megawatt-hours. Finding more sustainable approaches to training LLMs and exploring efficient model architectures are essential steps towards reducing their ecological footprint and guaranteeing their future viability.

6. Data Privacy and Security Concerns:

Finally, the fact that LLMs require access to vast amounts of data to achieve their impressive performance raises concerns regarding data privacy and security. It is hard to guarantee that their training sets contain no sensitive data. Therefore, safeguarding against potential data breaches and developing privacy-preserving techniques are critical considerations when deploying LLMs in real-world applications.

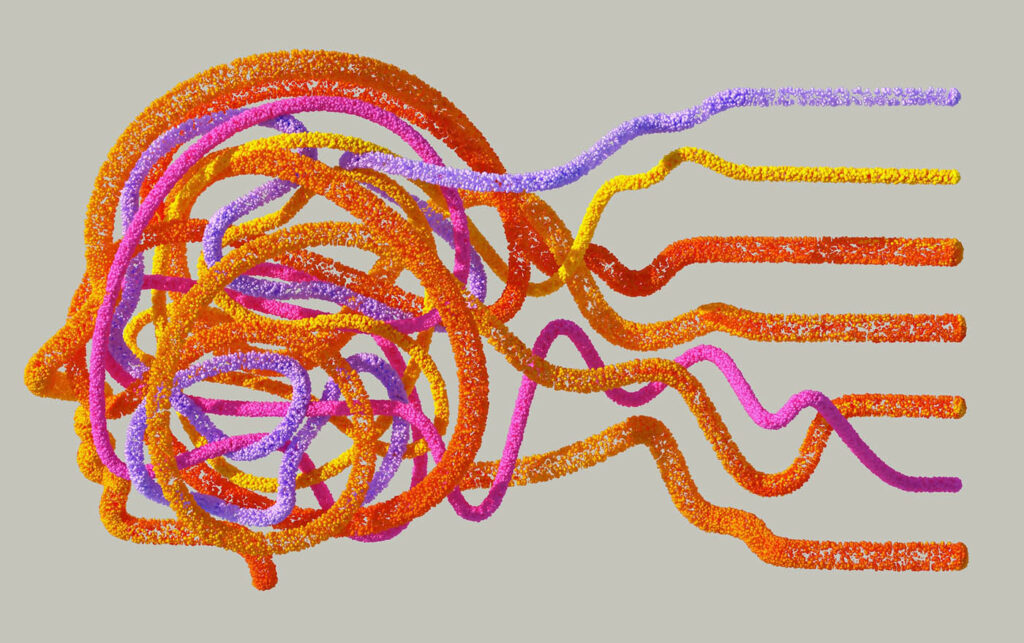

Beyond Large Language Models: The Future of AI

To overcome the limitations of LLMs and pave the way for the next phase of AI, researchers are exploring various avenues. One promising direction starts from the observation that only a small part of the information humans use every day is digital, and even less of it is textual.

Multimodal learning, which combines text with other modalities such as images, video, and audio, opens up learning to far larger amounts of information. And by incorporating additional context, AI systems can better understand and interpret human language in a broader sense.

This will require advancements in fields like computer vision and video analysis, which would open the door to even better speech recognition, enabling more immersive and comprehensive interactions with AI. However, the multimodal approach to AI would make the problems associated with scale even worse, if not supported by other enhancements that can help make training leaner.

As a consequence, the focus should be on the development of more efficient and environmentally sustainable methods for training AI models. Researchers are exploring approaches such as few-shot learning, transfer learning, and meta-learning. These techniques aim to reduce the reliance on massive datasets and computational resources while maintaining high performance. By leveraging prior knowledge and transferring learning across domains, these methods can make AI more accessible and practical for a wider range of applications, while also addressing concerns about energy consumption and environmental impact.

In order to ensure transparency while overcoming LLMs’ inability to understand context and generalise, AI research is increasingly focused on augmenting deep learning techniques with Symbolic AI. This approach falls under the name of Hybrid AI.

The history of AI has been largely dominated by two main paradigms: connectionism and Symbolic AI. While the connectionist paradigm is associated with processing vast amounts of data and learning a statistical model from it, the symbolic paradigm is founded on knowledge representation, reasoning, and logic.

Symbolic AI, which relies on explicit rules and logical inference to solve problems and perform higher-level cognitive tasks, was once dominant, until researchers realised that the number of rules and facts that needed to be specified to power these systems was enormous, and that any oversight was potentially disastrous. Symbolic AI was put aside as an approach after the second AI Winter, approximately in the early 1990s, but today there is a growing realisation that the answer to making progress may lie in unifying statistical and logical approaches.

The ability of statistical models to recognise patterns, correlations, and relationships in the data, and to encode them in their learned representations, can be used to automatically extract the symbolic rules emerging from the data. In other words, the process of defining the rules of a symbolic system, which used to be manual and daunting, can be automated through machine learning (ML). The connectionist subsystem of Hybrid AI therefore acts as a data-driven rule generator and provides continuous feedback to update and refine the symbolic subsystem as new data becomes available.
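To make the idea of a data-driven rule generator concrete, here is a minimal Python sketch. It is not how any particular Hybrid AI system works: the loan data, the feature names, and the `Rule` and `learn_rule` helpers are all hypothetical, and a simple one-threshold learner (a decision stump) stands in for the much richer statistical models discussed above. The point is only that a rule in readable IF/THEN form can come out of the data rather than being written by hand.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """A human-readable symbolic rule of the form IF feature > threshold."""
    feature: str
    threshold: float
    label_if_above: str
    label_otherwise: str

    def apply(self, record):
        if record[self.feature] > self.threshold:
            return self.label_if_above
        return self.label_otherwise

    def __str__(self):
        return (f"IF {self.feature} > {self.threshold} "
                f"THEN {self.label_if_above} ELSE {self.label_otherwise}")


def learn_rule(records, labels, features):
    """Try every feature and threshold; keep the rule with the fewest training errors."""
    classes = sorted(set(labels))
    best, best_errors = None, len(labels) + 1
    for feature in features:
        values = sorted({r[feature] for r in records})
        # Candidate thresholds are midpoints between consecutive distinct values.
        for low, high in zip(values, values[1:]):
            threshold = (low + high) / 2
            for above in classes:
                for below in classes:
                    if above == below:
                        continue
                    rule = Rule(feature, threshold, above, below)
                    errors = sum(rule.apply(r) != y for r, y in zip(records, labels))
                    if errors < best_errors:
                        best, best_errors = rule, errors
    return best, best_errors


# Hypothetical toy data: income in thousands, age in years.
RECORDS = [
    {"income": 20, "age": 25},
    {"income": 35, "age": 52},
    {"income": 50, "age": 31},
    {"income": 65, "age": 44},
    {"income": 80, "age": 23},
    {"income": 28, "age": 60},
]
LABELS = ["reject", "reject", "approve", "approve", "approve", "reject"]

rule, errors = learn_rule(RECORDS, LABELS, ["income", "age"])
print(rule)    # IF income > 42.5 THEN approve ELSE reject
print(errors)  # 0
```

When new records arrive, calling `learn_rule` again on the extended data would refresh the rule, which is a very small-scale version of the feedback loop described above.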

By integrating these two paradigms, AI systems can leverage the strengths of both approaches. Deep learning models can learn from vast amounts of data, while symbolic reasoning provides a framework for logical deduction and complex problem-solving.

This also has the potential to solve the explainability and interpretability problems of deep learning, because the resulting models can explain their outputs in terms of the rules that were applied to reach each decision.
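As an illustration of what an explanation in terms of rules could look like, the following Python sketch implements a tiny forward-chaining inference loop that records which rule produced each conclusion. The rule names, the facts, and the `infer` function are hypothetical and chosen only for this example; real symbolic reasoners are considerably more sophisticated.

```python
def infer(facts, rules):
    """Forward chaining: apply rules until nothing new is derived, logging each step."""
    known = set(facts)
    trace = []
    changed = True
    while changed:
        changed = False
        for name, premises, conclusion in rules:
            # Fire a rule only if all its premises are known and it adds something new.
            if conclusion not in known and premises <= known:
                known.add(conclusion)
                trace.append(
                    f"{conclusion} because {name}: {' AND '.join(sorted(premises))}"
                )
                changed = True
    return known, trace


# Hypothetical rules: (name, premises, conclusion).
RULES = [
    ("R1", frozenset({"income_above_threshold"}), "can_repay"),
    ("R2", frozenset({"can_repay", "no_defaults"}), "approve_loan"),
]

known, trace = infer({"income_above_threshold", "no_defaults"}, RULES)
for step in trace:
    print(step)
# can_repay because R1: income_above_threshold
# approve_loan because R2: can_repay AND no_defaults
```

The trace is the explanation: every derived fact points back to a named rule and the premises that triggered it, which is the kind of visibility a purely neural model does not offer.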

What is next for AI?

While Generative AI and large language models are presently catalysing attention and have set the stage for a new explosion of interest and investment, they may be about to plateau in terms of performance. However, as we look ahead, it becomes clear that the potential of AI can extend beyond these models, to multimodal and sustainable learning, and most of all to Hybrid AI. Progress in these areas will be instrumental in creating future AI systems that are more sustainable, versatile, and vastly more capable and general than those we have today.